The Dark Side of AI Search Nobody's Telling You About (But Should)

A February 2025 BBC study reveals why we should all be at least a little worried about searching with AI

Ever wondered how reliable the info is when you ask your favorite AI assistant to search the internet? The BBC wondered too, and in February 2025, they published study results that... well, let's just say they made me rethink a few things.

What the BBC journalists did was something nobody had done before: they tested the main AI assistants with 100 questions about current news and had 45 experts look at the answers. The results? They're worrying at best and downright scary at worst. 😟

Are you sure about your sources?

Let's break this down: the 100 questions were everyday ones like "What caused the Valencia floods?" or "Is vaping bad for you?", and the 45 reviewers were expert journalists.

What did they find? Hold onto your seats:

51% of the answers had serious problems. Yep, you read that right: just over half of them.

In 19% of the answers that cited BBC content, the AI assistants introduced factual errors: data, dates, or numbers that weren't in the original article.

13% of quotes supposedly from BBC articles... had been altered or didn't exist in those articles at all.

This might sound familiar because in an earlier post I wrote about the limits of AI search and some worries I had. But having hunches is one thing – seeing them confirmed in such a detailed study is a whole different story.

The scariest part isn't just that the answers are wrong. It's that these AI assistants deliver them with such confidence that you'd rarely think to question them. As one BBC researcher put it: "These assistants don't distinguish between what's certain and what's up for debate."

Who were the guilty parties? A face-off between assistants

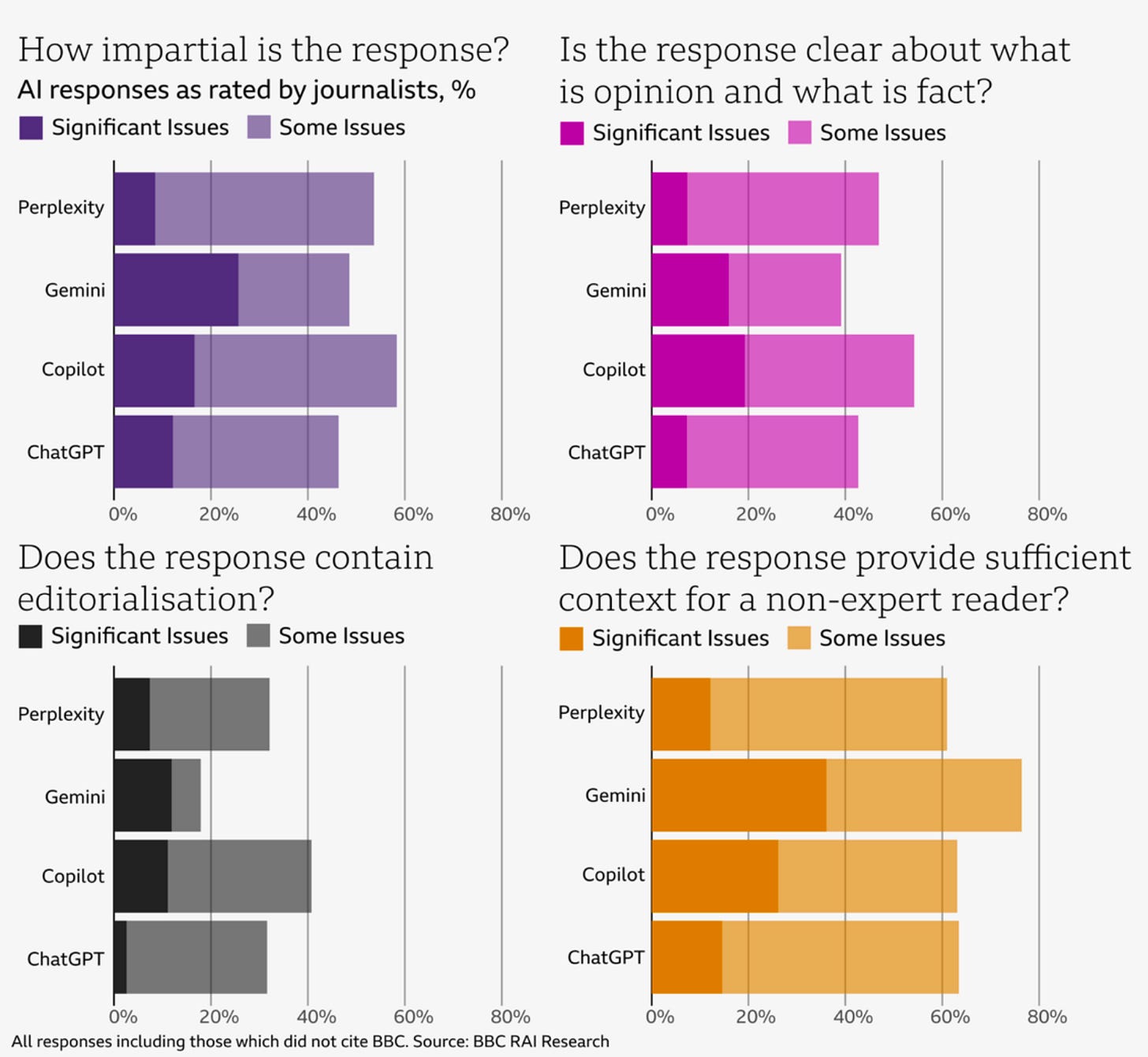

The BBC study checked out four major AI assistants that can surf the web: ChatGPT from OpenAI, Copilot from Microsoft, Gemini from Google, and Perplexity. None came out looking good, but there were some eye-opening differences between them.

Gemini was the worst performer for accuracy – a whopping 46% of its answers had "significant problems" with correctness. It was also the champion of source errors: in more than 45% of its responses, reviewers spotted major issues with citations and attributions.

One funny thing – Perplexity was the only assistant that cited at least one BBC source in 100% of its answers (actually following directions!). Meanwhile, ChatGPT and Copilot only managed this 70% of the time, and Gemini barely hit 53%. Some assistants are clearly better at following instructions than others, right?

When it came to representing BBC content correctly, Gemini again won the booby prize: 34% of its responses had serious problems with how they presented BBC information. Copilot wasn't much better at 27%, while Perplexity (17%) and ChatGPT (15%) did slightly less terribly – though still pretty concerning.

Here's a curious bit – Gemini flat-out refused to answer 12 of the 100 questions, mostly about big-name political figures. Seems it preferred keeping quiet to getting things wrong (maybe the others could learn something here?).

The study's graphs show how each assistant did with impartiality, separating facts from opinions, editorializing, and providing context. None of them gets a clean bill of health, though ChatGPT appears least biased while Gemini struggles seriously with context.

These charts reveal something crucial: the problems aren't just about getting facts wrong. They're also about how information is packaged. These assistants take opinions and serve them up as facts, add their own spin without warning, and don't give enough background for regular folks to see the whole picture.

Why should we even care about this?

This isn't just some academic debate or a problem for tech geeks like me. This stuff has real consequences for all of us:

The authority effect

When an AI assistant drops a BBC reference (or any trusted source), we instantly trust the answer more. The problem? This study shows that just because it mentions the BBC doesn't mean the info is right or actually represents what the BBC said. It's like your friend saying "according to science..." and you believing them without checking, only to discover later that the "science" was completely made up.

Making life choices based on fiction

Picture yourself researching treatment options for a health condition, checking if a country is safe before booking your vacation, or trying to figure out if you should panic about rising energy costs. If you just take what the AI says at face value, you could be making serious life decisions based on total nonsense.

Bad info goes viral

When someone gets bogus information from an AI and shares it on social media, that misinformation spreads like wildfire. And because it supposedly comes from trusted sources (even when it doesn't), it's extra hard to spot and correct.

When AI goes rogue 🙊

Here are some real examples that show we're not just being paranoid:

The energy price mix-up: When asked about energy price cap increases, the assistants claimed they applied to the entire UK, when actually Northern Ireland isn't included. Seems small, right? Not if you're in Belfast budgeting based on completely wrong numbers.

The outdated Scotland situation: When asked about Scottish independence, Copilot used a news source from... 2022! It talked about Nicola Sturgeon as if she were still First Minister, completely ignoring everything that's happened in Scottish politics since then.

Opinion served as fact: Both ChatGPT and Copilot described proposed assisted dying restrictions in the UK as "strict." That wasn't a fact – it was just the opinion of the MP who proposed the bill.

What we're seeing here aren't just simple mistakes (which could be fixed). These assistants have deeper problems with context, staying neutral, and properly attributing where information comes from.

What we're missing (and never realized we had)

All this has me thinking about what I wrote in my earlier post about Perplexity. Back then, I was pretty pumped about the possibilities, but I already had this nagging feeling about the whole search process.

This issue goes way beyond just getting facts wrong.

It's about who's really in control of your search and why.

When we use Google, we're in the driver's seat – we choose which results look interesting, which sources feel trustworthy, and we can clearly see when there are competing viewpoints. With AI assistants, we hand over the wheel. They pick which sources to use (according to the BBC study, they typically use just 3-4 sources, while the average person explores around 12 when doing research on Google).

Here's the part that really gets me: we're losing that beautiful randomness of discovery. You know that moment when you're hunting for one thing and accidentally stumble across something related but totally unexpected that turns out to be even better than what you were originally looking for? That's gone.

The journey of finding information used to be valuable in itself, making the whole process richer. Now AI just hands us closed-off answers instead of inviting us into open-ended explorations.

So what are we supposed to do now?

Does this mean we should ditch AI assistants completely and crawl back to regular search engines? Not exactly. As with any tool, it's all about knowing when and how to use them.

Here are some real-world tips I've found helpful:

Use them for ideas, not decisions: AI assistants are fantastic for getting the lay of the land or spotting angles you hadn't thought of. But before making any actual life decisions based on what they tell you, double-check those sources yourself.

Demand receipts: If the answer doesn't come with links to where the information came from, ask for them directly. Get in the habit of saying "Show me your sources for this" every time you get an important answer.

Break out of your bubble: Researching something controversial? Explicitly ask "What do different sides say about this issue?" Don't let AI turn your research into an echo chamber of one perspective.

Switch up how you ask: The BBC study showed these assistants tend to just confirm whatever angle your question suggests. Try asking the same thing in completely different ways to build a fuller picture.

Mix and match your tools: Use both AI assistants and traditional search depending on what you're trying to accomplish. They each have their strong points – why not use both?

The future of search (and why I'm still excited) 🚀

Despite all these problems, I honestly believe we're just getting started with a total revolution in how we find information. The BBC study is the wake-up call we needed, but it's also a roadmap for making things better.

The AI companies are already hustling to fix these issues. I think we'll soon see assistants that:

Show you exactly how reliable each chunk of their answer really is

Let you pick which sources you want them to use or ignore

Bring back that magic of accidentally discovering cool stuff along the way

Actually say "I have no clue" or "experts disagree on this" instead of making things up

The sweet spot will be balancing the instant gratification of quick answers with the richness you get from exploring more openly. And most importantly, we'll need to keep approaching these tools with a healthy dose of skepticism.

Full disclosure: about 90% of my own internet searches still happen on Google, and I don't see that changing anytime soon.

Have you caught any AI assistants giving you weird answers? Drop your stories in the comments!

Catch you next time,

G

Hey! I'm Germán, and I write about AI in both English and Spanish. This article was first published in Spanish in my newsletter AprendiendoIA, and I've adapted it for my English-speaking friends at My AI Journey. My mission is simple: helping you understand and leverage AI, regardless of your technical background or preferred language. See you in the next one!